The Best of ISMIR 2020

Eighth time attending ISMIR and first time attending virtually –some may say we were in Montvirtual instead of Montréal (sorry, not sorry). Despite this pandemic hiccup, the organization was stellar: access to papers, posters, meet ups, and keynotes was surprisingly straightforward. Slack + Zoom is definitely an upgrade to any other virtual conference I attended this year. Seems like we’re making progress in figuring out how to attend conferences from home!

Sad news is that ISMIR 2021, which was supposed to take place in Bangalore, will also take place virtually. Good news is that ISMIR 2022 will take place in Bangalore. I guess my long awaited first ever trip to India will have to wait one more year. Nevertheless, from now on I wish all ISMIR conferences offer a virtual attendance option to not only make it more affordable and more eco-friendly, but also to circumvent the nonsensical visa issues.

In this post I will try to give a brief overview of my favorite talks / presentations of this year’s ISMIR. I have to say that I found this year’s quality much higher than in previous years, which goes hand in hand with this year’s lower acceptance rate (~38%, as opposed to close to 50%). Some speculate that the one-month extension to the paper submission deadline might have helped polish the submitted works. Another cool thing is that around 50% of its ~800 attendees (highest number of ISMIR attendees ever) were ISMIR first-timers. So good to have many new participants in the field :)

In any case, here you go: the best of ISMIR 2020!

Keynotes

Technically, there was only a single Keynote, but it was fantastic. None other than Dr. Safiya Umoja Noble, the author of Algorithms of Oppression, talked about biases and fairness in Machine Learning. I particularly enjoyed how she related the Big Tech industry with Big Tobacco and Big Cotton. And this can give us a sense of hope: we first see these massive industries as impossible to “regulate,” but then we do, and they become less of a threat than we initially thought. I’m not saying Big Tech will disappear soon, but Dr. Noble’s point is that we should fight to have these big companies be accountable for their extremely worrisome and polarizing practices. And that there’s precedent that this is a feasible thing to do.

After talking with a friend about her keynote, we both agreed that we wished to have seen “Big Meat” up there too, but I guess ISMIR is a technical conference after all, and food is a bit too tangential to talk about.

As part of the Women in MIR talk series, there was a second keynote by Professor Johanna Devaney from Brooklyn College, CUNY. Prof. Devaney talked about music performance and musical styles, using several MIR techniques to analyze such important aspects of music. Really interesting talk about an aspect of MIR that is often overlooked.

Overall, I am so happy to see these two inspiring women as keynote speakers at ISMIR 2020.

You can watch the keynotes here:

- Dr. Noble’s: https://www.youtube.com/watch?v=a3LutlLru5k

- Prof. Devaney’s: https://www.youtube.com/watch?v=vUQ6s0bsYO8

Top 10 Papers

This is my personal selection, biased by some conversations I had with my team at Pandora and other attendees. Sorted by first author’s last name. Really hard to pick just 10, but here I go.

Multiple F0 Estimation in Vocal Ensembles Using Convolutional Neural Networks - Helena Cuesta et al.

One of the few papers I’ve seen where the phase is used as part of the input to a model. The authors attempt to estimate multiple pitches with CNNs that employ both the magnitude and phase of low-level harmonic representations. They experiment with two different flavors of their CNN architecture and report state-of-the-art results.
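
For a rough idea of what "magnitude and phase" means as model input, here is a minimal sketch. It assumes a naive single-bin DFT on a toy frame; it is not the authors' harmonic representation or their pipeline, and the names `dft_bin` and `features` are mine.

```python
import cmath
import math

def dft_bin(frame, k):
    """Naive DFT of one frequency bin k (no windowing, illustration only)."""
    n_samples = len(frame)
    return sum(
        x * cmath.exp(-2j * math.pi * k * n / n_samples)
        for n, x in enumerate(frame)
    )

# Toy frame: one cycle of a cosine with a known phase offset of pi/4.
N = 8
frame = [math.cos(2 * math.pi * n / N + math.pi / 4) for n in range(N)]

coefficient = dft_bin(frame, k=1)
magnitude = abs(coefficient)      # how strong the component is
phase = cmath.phase(coefficient)  # where in its cycle the component starts

# Assumption: a model could receive both values as separate input channels.
features = [magnitude, phase]
```

Most MIR models keep only `magnitude` and throw `phase` away, which is what makes this paper stand out.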

Female Artist Representation in Music Streaming - Avriel C. Epps-Darling et al.

I was so happy to see this title when bidding for my papers as a meta-reviewer. First large-scale analysis on gender bias in music streaming services (that I’m aware of!). Turns out that ~1 in 5 spins in Spotify are from female artists, while this imbalance is slightly less pronounced when taking into account non-programmed spins (e.g., directly listening to a specific artist/album/track without automatic/editorial recommendations). This is a fascinating area of research, and lays the foundation to start addressing this problem of bias and fairness in music recommender systems.

Multilingual Music Genre Embeddings for Effective Cross-lingual Music Item Annotation - Elena V. Epure et al.

Very interesting usage of graphs to translate genres across several languages. The authors learn an embedding that is not only effective at this translation, but also beats previously presented systems. This is a fascinating topic that could particularly help address the discovery of music within non-Western countries.

BebopNet: Deep Neural Models for Personalized Jazz Improvisations - Shunit Haviv Hakimi et al.

This one won the best research award. And it is well deserved: first time I see a clever and effective method to target the output of an MIR system to a specific user. This topic of personalization of MIR tasks has been discussed in the past, but never fully addressed. In this case, the authors are generating jazz improvisation depending on the target audience. Check out the audio examples, they are really impressive!

Multitask Learning for Instrument Activation Aware Music Source Separation - Yun-Ning Hung & Alexander Lerch

I really enjoyed this one. The authors present an end-to-end model that jointly classifies instruments and separates them from the signal. By doing this in a multi-task fashion, the model yields superior results in several metrics. Very interesting U-shaped encoder-decoder architecture that could potentially be explored in other MIR tasks in the future.

How Music Fans Shape Commercial Music Services: a Case Study of BTS and ARMY - Jin Ha Lee & Anh Thu Nguyen

BTS is a K-Pop (?) band whose existence I had no idea about (yet one of their videos that premiered two months ago has half a BILLION streams on YouTube –so I’m clearly missing out). This band has an army of fans called ARMY, and this study was conducted on ARMY members to better understand how users interact with music streaming services. Some of these fans call BTS “a genre,” which I find fascinating, and which is part of the findings of this study. Another key point is that the collective experience of the ARMY community makes the music of BTS even more popular.

N.B.: Professor Jin Ha Lee (with whom I had the pleasure of having really interesting discussions during this ISMIR) will be one of the main organizers of ISMIR 2021!

Metric Learning vs Classification for Disentangled Music Representation Learning - Jongpil Lee et al.

Jongpil did it again. Wonderful exploration of metric learning (i.e., triplet loss networks) and classification approaches. The authors are able to “disentangle” the space of a given input such that it can explicitly encode four different modalities: genre, mood, instrumentation, and era. The implications of this model are of great value for music recommender systems: with this disentangled space, one could use embeddings to query specific parts of these different disentangled modalities (e.g., “Give me the tracks of a specific genre and mood”).
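
To make the triplet loss idea a bit more concrete, here is a minimal sketch. It assumes a toy 4-dimensional embedding with one dimension per modality; the `SUBSPACES` layout and the function names are hypothetical and not taken from the paper, whose model uses learned embeddings with many dimensions.

```python
import math

# Hypothetical layout: one embedding dimension per modality.
SUBSPACES = {"genre": [0], "mood": [1], "instrumentation": [2], "era": [3]}

def distance(a, b, modalities=None):
    """Euclidean distance restricted to the chosen modalities (all if None)."""
    names = modalities or list(SUBSPACES)
    dims = [d for name in names for d in SUBSPACES[name]]
    return math.sqrt(sum((a[d] - b[d]) ** 2 for d in dims))

def triplet_loss(anchor, positive, negative, modalities=None, margin=1.0):
    """Zero once the positive is closer than the negative by at least `margin`."""
    d_pos = distance(anchor, positive, modalities)
    d_neg = distance(anchor, negative, modalities)
    return max(0.0, d_pos - d_neg + margin)

anchor = [0.0, 0.0, 0.0, 0.0]
same_genre = [0.0, 5.0, 0.0, 0.0]   # same genre as anchor, very different mood
other_genre = [3.0, 0.0, 0.0, 0.0]  # different genre, same mood

genre_only = triplet_loss(anchor, same_genre, other_genre, ["genre"])
full_space = triplet_loss(anchor, same_genre, other_genre)
```

Restricted to the genre dimensions the triplet is already satisfied, while in the full space the mood difference dominates. That is the kind of per-modality querying a disentangled space allows.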

Pandemics, Music, and Collective Sentiment: Evidence from the Outbreak of COVID-19 - Meijun Liu et al.

Interesting early work on how music consumption has been affected by the pandemic. I’m honestly surprised that the authors were able to analyze these data with such short notice. The results suggest that after the first case of the pandemic is detected in a country, the sentiment of the music streamed is generally more negative. Studies like this could potentially help music recommender systems in dire times (like, e.g., November 3rd?).

Zero-shot Singing Voice Conversion - Shahan Nercessian

The authors present an updated version of AutoVC (Auto-Voice Conversion) such that it can operate on singing voice. They do this in a zero-shot way: no labeled data is needed to convert a given singing voice to a new singing voice. Interesting audio demos: https://sites.google.com/izotope.com/ismir2020-audio-demo

Few-shot Drum Transcription in Polyphonic Music - Yu Wang et al.

Maybe I’m biased due to my profound admiration for any work that comes from Juan Pablo Bello’s lab at NYU, but my bias notwithstanding: this is my favorite paper of ISMIR 2020. The authors propose the usage of Prototypical Networks to be able to transcribe drums automatically given an input example. That is: if you have a little audio snippet, you can query a full audio track and identify where similar sounds occur across the entire track (constrained to drums in this work). Not only is the methodology highly novel, but this has so much potential for any type of query-by-audio application. Really cool!
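
The core idea of Prototypical Networks fits in a few lines. This is a minimal sketch, assuming the embeddings already exist (in practice they would come from a trained network); the 2-D vectors, labels, and function names below are made up for illustration and are not the authors' code.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def build_prototypes(support):
    """One prototype per class: the mean of its few support embeddings."""
    return {label: mean_vector(vectors) for label, vectors in support.items()}

def classify(query, prototypes):
    """Assign the query to the class with the nearest prototype."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))

# Hypothetical 2-D embeddings of a few labeled drum hits (the "few shots").
support = {
    "kick": [[0.0, 0.0], [0.2, 0.0]],
    "snare": [[1.0, 1.0], [1.0, 0.8]],
}
prototypes = build_prototypes(support)

# Each frame of the full track would be embedded and classified like this.
first_label = classify([0.3, 0.1], prototypes)
second_label = classify([0.9, 1.0], prototypes)
```

Adding a new sound class only means providing a handful of examples and averaging them, with no retraining, which is why this approach suits query-by-audio so well.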

Bonus Papers

It’s admittedly embarrassing to include your own paper in a “best papers” list, but I wanted to highlight the following: it was validating to see that 3 different works this year showed that listening behavioral data can potentially be more informative at extracting music information from a given track than the audio content of the track itself. If this is something that may interest you, here are the three different works:

- Should We Consider the Users in Contextual Music Auto-tagging Models? - Karim M. Ibrahim et al.

- Mood Classification Using Listening Data - Filip Korzeniowski et al.

And if you’re curious about our ISMIR paper, I wrote a little post here.

Tutorials

The first tutorial I attended was the one on Metric Learning. Big names in the field (i.e., Brian McFee, Jongpil Lee, and Juhan Nam) gave this really informative tutorial on one of the hottest topics in MIR. Prof. McFee gave a –dare I say– must-watch overview of linear methods of metric learning (i.e., pre-deep learning era). His explanations of LDA and PCA were excellent. Jongpil went deep into deep learning techniques for metric learning, explaining how they work, and giving a really interesting live demo on the Colab notebook. Finally, Juhan gave an overview of the latest advances in metric learning for MIR. Overall, highly recommended tutorial, and all the code that the authors presented is available here: https://github.com/bmcfee/ismir2020-metric-learning

Then I attended the tutorial on Klio, a new open-source framework developed by the Spotify titans. Klio is built on top of Apache Beam to process large quantities of audio data. Interestingly, we at Pandora make use of Beam (running on DataFlow on GCP) to process large-scale audio data too, so it is good to see that other companies are employing similar technologies. Klio seems like a very powerful tool to simplify Beam pipelines that deal with large amounts of audio files.

Workshops

For the first time, ISMIR offered a full day of workshops. I attended NLP4MusA (Natural Language Processing for Music and Audio), organized by some of my colleagues at Pandora. I highly enjoyed the keynote by Colin Raffel, a former colleague from Stanford back when we were young, who after doing a PhD in MIR, landed a job as a Research Scientist at Google Brain where he specialized in NLP tasks and released the impactful T5 model. Currently, he is a Professor at the University of North Carolina at Chapel Hill. So, Prof. Raffel tried to convince us to stop training models from scratch when dealing with music: we should use models pre-trained on massive amounts of data, and then fine-tune them to the specific task (similarly to what happens in the NLP world –see BERT). (Shameless plug: that’s basically what we did in our ISMIR paper, using MusiCNN instead of BERT :-)).

NLP4MusA was a big success, and I think it deserves its own blog post to cover all the great material offered. I hope this is the first instance of many to come of this successful workshop!

The Music Program

This year, not only was the technical program of the highest quality, but so was the music program! Here I leave my two favorite performances:

- A Room with Chacone (Bach) by Seth Thorn:

- I’ll Marry You, Punk Come by Dadabots X Portrait XO:

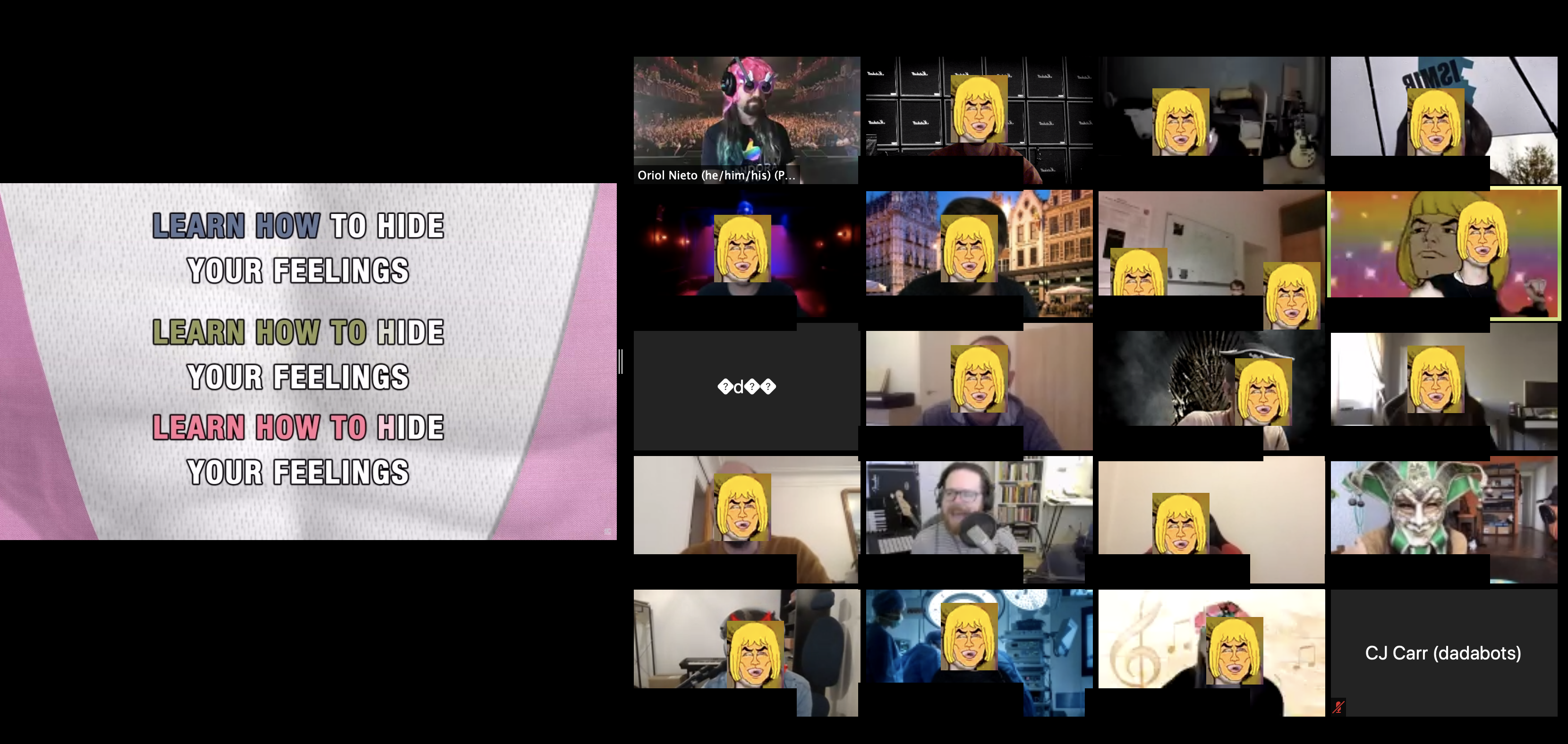

Karaoke Jams

An ISMIR without a Jam Session is like a Harry Potter movie without magic: it is still very interesting, with all of those furry creatures and other socially aware dilemmas and intricate plots, but Harry Potter is a wizard, so people who watch these movies are all passionate about magic. I’m not really sure where I’m going with this. Except that I love Harry Potter and also music.

Since 2015 I’ve had the honor to “chair” the ISMIR Jam Sessions (except in 2017, when I made the mistake of not attending ISMIR), and this year was not supposed to be different except that a thing called Covid-19 came up and made us stay home. Nevertheless, and inspired by the RecSys Karaoke session, I made a last minute Call for Musicians (CfM) and, due to popular demand, three different Karaoke Jams took place in this year’s ISMIR. And it was delightful.

One of the highlights was when someone put on a He-Man mask and sang the epic What’s Up song interpreted by He-Man himself. It was great to see both veterans (e.g., Ryan Groves) and newcomers (e.g., Christine Bauer) participating in this event.

This was a sweet reminder of the massive amount of talent and wonderful sense of community that make ISMIR the best technical conference ever. Thank you to all who participated/attended, and see you again on Zoom next year!

Want more?

Check out other ISMIR 2020 paper selections that these titans put together:

- Ilaria Manco: https://ilariamanco.com/2020/10/18/ismir2020/

- Jordi Pons: https://www.jordipons.me/top-10-ismir-papers-my-selection/

- Hao Hao Tan: https://gudgud96.github.io/2020/10/17/ismir_2020/

- Philip Tovstogan: https://philtgun.me/2020/10/16/ismir/

And don’t forget to check out the official best papers!